MIDI part 2: raising some bars

— updated on

This is a continuation of my previous post on MIDI. Originally I was gonna make two continuation posts, but I think I'll combine them into one long post.

Some notes

So here are some notes of interest I had when writing more covers for my MIDI page. They're not much, but I just thought I'd write them down anyway. In advance, sorry this section is so short. I wrote it a long time ago, so the excruciating details might not come back to mind just yet.

Hymn to Aurora

Drum patch 24 (electronic) is used here to approximate the original samples, assuming the "default MIDI soundset" (as described in the first MIDI post) is used. Unfortunately, since bg-sound operates from Gravis Ultrasound patches (and therefore a GUS approximation of gm.dls), you won't hear this drum set in the browser and instead get the standard one.

Y'know, it always felt kind of odd to me that Roland's definition of an Acoustic Snare in this patch sounds much more like a tom, and so does the bass drum. It's nearly as odd as the presence of two power-pads and sound effects in the GM instrument set.

There is a bit of an oversight in the program changes on one of the echo channels, but I don't feel like fixing it. Now that I've let you know, you're probably gonna notice it, if you haven't before.

Ocean Loader 2

What I noticed about this song is that it's got differing time signatures. The intro and the end have a time signature of 3/4, while the main part has a 4/4 time signature. In this case, it's an opportunity to insert time signature meta events.

It's interesting how different players deal with the time signature meta. I believe I inserted it only on the first track, the same one where tempo changes are defined. Anvil Studio inserts this meta event on every track, SynthFont 1 ignores the mid-song time signature change, while Rosegarden handles it just fine.
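For reference, here's roughly what that meta event looks like as raw bytes. This is only a minimal sketch going by the Standard MIDI File layout (FF 58 04, then the numerator, the denominator as a power of two, MIDI clocks per metronome click, and 32nd notes per quarter note). The function name and the default values of 24 and 8 are made up for illustration and aren't read out of the actual file.

```python
def time_signature_meta(numerator: int, denominator: int,
                        clocks_per_click: int = 24,
                        thirty_seconds_per_quarter: int = 8) -> bytes:
    """Build the raw bytes of an SMF time signature meta event (FF 58 04 ...)."""
    # The denominator is stored as a power of two: 4 -> 2, 8 -> 3, and so on.
    power = denominator.bit_length() - 1
    if denominator <= 0 or (1 << power) != denominator:
        raise ValueError("denominator must be a power of two")
    return bytes([0xFF, 0x58, 0x04, numerator, power,
                  clocks_per_click, thirty_seconds_per_quarter])


# 3/4 for the intro and ending, 4/4 for the main part.
intro = time_signature_meta(3, 4)
main = time_signature_meta(4, 4)
print(intro.hex(" "))  # ff 58 04 03 02 18 08
print(main.hex(" "))   # ff 58 04 04 02 18 08
```

In a real file each of these would still need a delta time in front of it, which is left out here.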

Starshine

This was a request, and I think I'm happy with it. Generally I've just experimented a little to see what sounds would fit the best for the samples. But these are just approximations. I've said while making this, that the more I work with MIDI the more I find myself sympathizing with Neil Biggin due to its admittedly limited palette, and the electronic instruments being stuck in italo disco forever.

Maybe you should use a different patch for that lead, it sounds different.

Huh? This one sounds pretty close...

Not with my soundfont.

grr...

Also at the time of making, I'd say I've outdone myself here, mainly because the source material is just so rich with sound that even a "watered down" MIDI version of it still sounds pretty nice. It's so fire that Windows Media Player can't even handle the large amounts of polyphony in that thing. ...Then again, that might just be Windows 10's reduced polyphony here.

I also found that the master pitch in FL Studio actually shifts all pitch slides in the MIDI by that amount, which is handy considering all the samples in this module are tuned slightly higher. While making this cover, I actually had to tune down all the samples in the original s3m.

I've thought of using a meta message to set the tuning, but as with everything, I'm not sure if soundfont engines support that. So a global pitch slide it is.

Losers Club, Twitter for Android

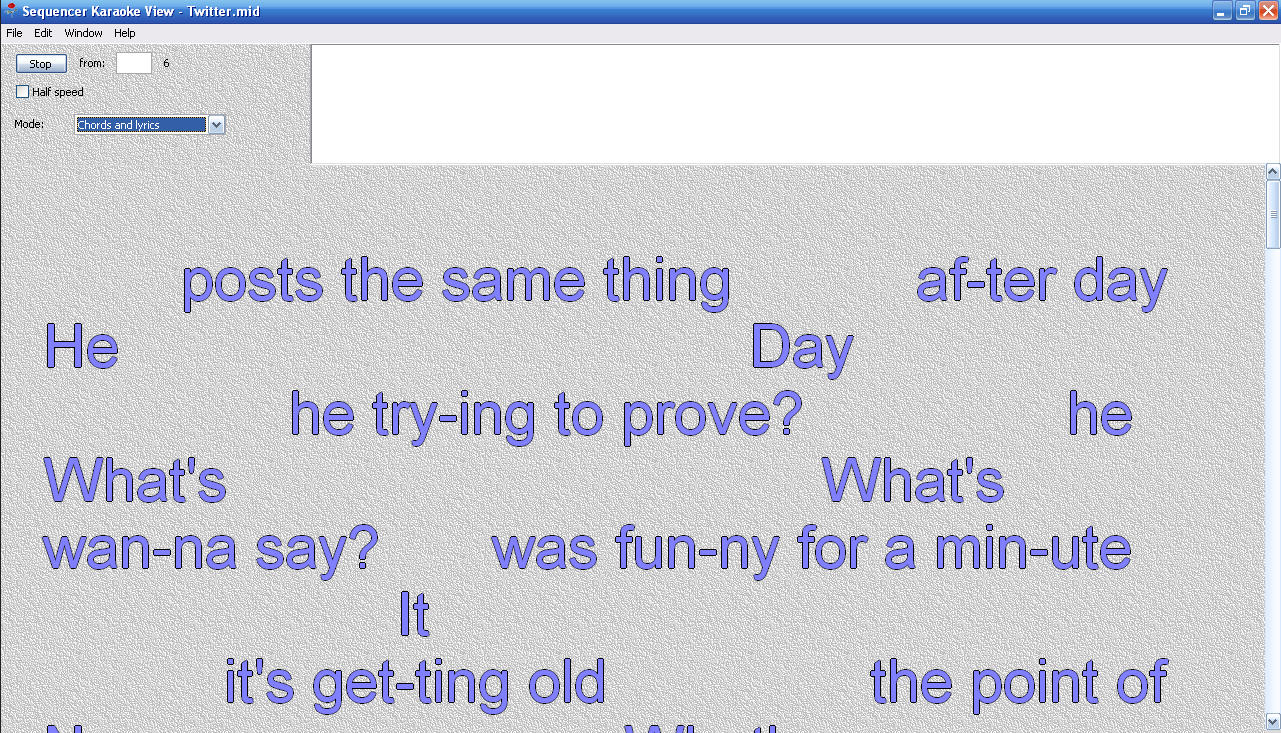

For these ones I looked into MIDI lyrics some more. This isn't the first time I've inserted lyrics into a MIDI; the first one was the cover of Oceania (Literally 1984). I found Rosegarden to be useful here because you can insert syllables for every note in one channel.

As for how it's typed, there are different ways. I tried newlines, dashes after each syllable, spaces... The one that seems to work is "new line before every phrase and spaces after every word". Whether or not dashes after syllables are removed seems to depend on the particular player, but generally I find they lack dashes anyway.

Then I found out several different karaoke formats exist. Oh, MIDI.

SoftKaraoke's first track must be text events that mark it as a KMIDI KARAOKE FILE, and its second track must be lyrical content. (I don't really agree with splitting lyrics onto their own track, I'd much prefer them being in the same track as the melody...)

For splitting new lines, the SoftKaraoke format specifies the \ (back slash) character should be used.
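To make the line-splitting idea concrete, here's a rough sketch of how a player might stitch SoftKaraoke-style syllables back into display lines. It assumes the text events were already pulled out of the lyrics track as plain strings (the input list is hypothetical), that header texts start with `@`, and that a leading `\` starts a new line as described above. Accepting a leading `/` too is my own simplification, since some files seem to use it as a break marker as well.

```python
def join_karaoke_text(events):
    """Turn SoftKaraoke-style text events into a list of display lines.

    events: text-event strings in playback order (hypothetical input,
    already extracted from the lyrics track by some other tool).
    """
    lines = [""]
    for text in events:
        if text.startswith("@"):
            # Header/info texts such as "@KMIDI KARAOKE FILE" are not lyrics.
            continue
        if text[:1] in ("\\", "/"):
            # A leading marker means "start a new line before this syllable".
            lines.append("")
            text = text[1:]
        lines[-1] += text
    # Drop the empty placeholder lines left behind by leading markers.
    return [line for line in lines if line]


events = ["@KMIDI KARAOKE FILE", "\\Hel", "lo ", "world", "/Se", "cond ", "line"]
print(join_karaoke_text(events))  # ['Hello world', 'Second line']
```

Real players do more than this (timing, highlighting, paragraph breaks), so treat it as an illustration of the markers only.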

VanBasco's player seems to have the best tolerance for multiple karaoke formats, though it still acts weird with multiple lyrics channels like the one in the Twitter for Android cover (despite having "allow multiple karaoke channels" checked), since it just mashes the two together leaving a confusing mess. Maybe it's not good practice, anyway.

Meanwhile, Midica isn't quite as "tolerant" as VanBasco. If it doesn't see a SoftKaraoke style header/format it doesn't split the slashes into new lines. It also parses 0x0D \r as "down one line", while 0x0A \n is "down two lines".

The karaoke formats thread had this sad bit that I think can be noted:

I'm not aware of any MMA compliant software apart from my own: Midica.

At least I think that Midica is compliant to RC-026. I cannot test it due to a lack of compliant example files.

Anyway, playing with newlines like that unfortunately also breaks Serenade:

There's another software called WinKaraoke and it has this neat bouncing ball thing, but it seems to be only compatible with SoftKaraoke files. My "karaoke" MIDIs aren't real "karaoke" MIDIs, so no bouncing ball for them. 😔

I did that drum stick 1.. 2.. 1, 2, 3, 4 thing with the Losers Club cover to emulate what a "real" karaoke MIDI would do, but now I know why that was done. Apparently I can't just jump straight into it, because VanBasco cuts the first syllable of the Oceania cover. That didn't happen with TiMidity.

The hidden power of MSGS

Then outta nowhere, there's YAOTA by Kot. Somehow EDM is possible using only the Windows default MIDI synth. Yeah. My initial reaction was that this MIDI was made at a very high tempo, while everyone else who listened to it thought it was filters. But nope. It was recorded straight outta Windows Media Player. You can't imagine such a thing coming from MIDI, but here we are.

But as it turns out, the MIDI was made at a reasonable BPM (180, to be exact). The real meat, however, lies in CC event spam and the wider range of sounds made available through the GS standard (that's the "GS" in "Microsoft GS Wavetable Synth"), which it takes advantage of through clever sound design. For example:

- There's a bass sound using the machine gun SFX and the baritone sax to emulate rough bass synths.

- Sine waves for kick + click SFX to give it that extra punch.

- Masterful use of pitch bends everywhere. And I do mean everywhere, including the drums.

- Really short note lengths for some other harsh synth sounds.

The end result is pretty mindblowing. But quoting the track's author, "that's nothing compared to HueArme". And indeed, HueArme's work is even more mindblowing, and more so because their entire discography is nothing but MSGS that sounds a lot more like DAW output. Who knew you could squeeze this much out of such a shit synth that even Microsoft left to rot? Here's hoping there's a "MSGS scene" somewhere (there probably already is, but it's just really obscure).

Looking at the details

Well, even though I'm nowhere near their level, I thought I'd still try and up my own game. And so I learned some new stuff to add to my MIDI-making toolbox: the expression CC and SysEx.

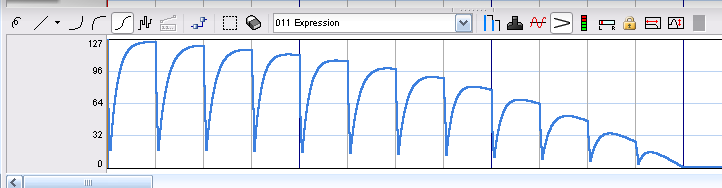

The expression CC (CC #11) is yet another way to control MIDI volume. Unlike velocity, which is set per note, this CC is continuous. Another advantage of using this CC is that you can simply let the track volume be, which saves the headache of having to adjust dynamic track volumes. In this case, you can use it for a sidechaining effect by fading it from 0 to 127 every beat.
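As a rough illustration of that sidechain trick, here's a small sketch that generates the CC #11 values for a ramp restarting on every beat. The 480 ticks per quarter note and the 16 steps per beat are assumptions picked for the example, not values taken from any of my files, and the output is just (tick, value) pairs that would still need to be written out as controller events.

```python
def sidechain_ramp(beats: int, ppq: int = 480, steps: int = 16):
    """Return (tick, value) pairs for CC #11, ramping 0 -> 127 on every beat."""
    if steps < 2:
        raise ValueError("need at least two steps to form a ramp")
    events = []
    for beat in range(beats):
        for step in range(steps):
            # Spread the steps evenly across the beat.
            tick = beat * ppq + step * ppq // steps
            # Linear fade: first step is silent, last step is full volume.
            value = round(127 * step / (steps - 1))
            events.append((tick, value))
    return events


ramp = sidechain_ramp(beats=2)
print(ramp[0], ramp[15], ramp[16])  # (0, 0) (450, 127) (480, 0)
```

Fewer steps means fewer events in the file at the cost of a more audible staircase, so the step count is a trade-off.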

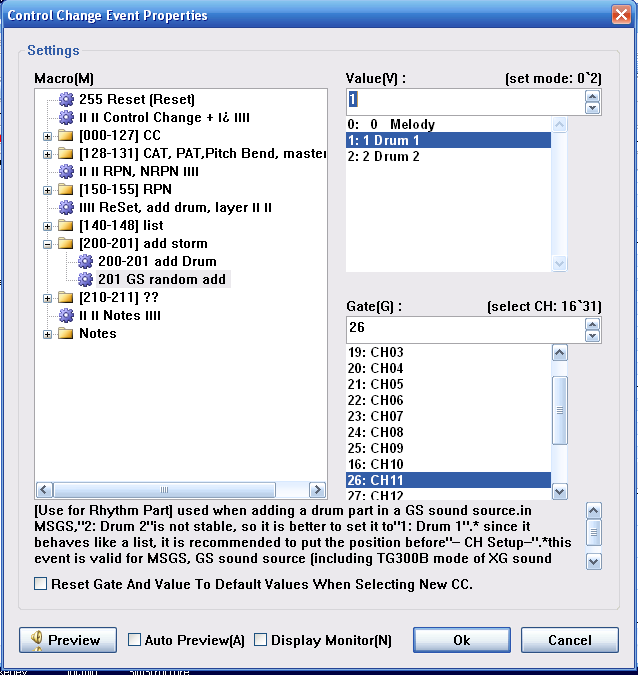

The next thing is SysEx. I've always avoided it since I didn't think it to be all that relevant, but in this case, it is! MSGS actually parses this to permit stuff like drum channels other than ch. 10 and bank switching. And you want that if you want to use the full GS palette.
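For the curious, this is roughly what such messages look like when built by hand. It's a sketch based on my understanding of Roland's GS data-set format (F0 41 10 42 12, a three-byte address, the data, a checksum, then F7). The helper names are made up, the addresses are from memory of Roland's documentation, and I haven't verified that MSGS honors every one of them.

```python
def roland_checksum(payload: bytes) -> int:
    """Roland-style checksum over the address and data bytes."""
    return (128 - sum(payload) % 128) % 128


def gs_sysex(address, data) -> bytes:
    """Wrap an address and data bytes in a GS 'data set' SysEx message."""
    body = bytes(address) + bytes(data)
    header = bytes([0xF0, 0x41, 0x10, 0x42, 0x12])
    return header + body + bytes([roland_checksum(body), 0xF7])


def use_for_rhythm(channel: int, drum_map: int = 1) -> bytes:
    """Turn a channel (1-16) into a drum part; drum_map 0 turns it back."""
    # GS numbers its part blocks oddly: channel 10 is block 0,
    # channels 1-9 are blocks 1-9, and channels 11-16 are blocks 0xA-0xF.
    if channel == 10:
        block = 0
    elif channel < 10:
        block = channel
    else:
        block = channel - 1
    return gs_sysex((0x40, 0x10 | block, 0x15), (drum_map,))


GS_RESET = gs_sysex((0x40, 0x00, 0x7F), (0x00,))
print(GS_RESET.hex(" "))               # f0 41 10 42 12 40 00 7f 00 41 f7
print(use_for_rhythm(11, 2).hex(" "))  # f0 41 10 42 12 40 1a 15 02 0f f7
```

A GS reset normally goes at the very start of the file, with the other messages after it.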

There are several others that I tried, but apparently not all synths support these:

- The filter CCs, which seem to be resonance (CC #71) and cutoff (CC #74). If a synth supports these, even richer sounds can be made, and there's more opportunity to abuse patches to sound like something they're not.

- Envelope CCs, usually attack (CC #73) and release (CC #72). Assuming the samples in the soundfont or DLS don't have hardbaked envelopes (not a good idea either way), you can modify how fast a note fades in and out. Again, this can be useful for making wild sounds out of patches that aren't even intended for it.

As far as I know, BASSMIDI VSTi does support these, but MSGS doesn't. I have yet to test if VirtualMIDISynth has support, but I'm gonna guess it does.

Let's test this out

And now, to apply them. Trying my existing tooling first, here's what I came up with—a short snippet of Carpenter Brut's Roller Mobster. I daisy-chained a bunch of automation clips together and applied it to a bunch of MIDI Out controls. I have two automation clips: one which is just the sidechaining stuff, and then the other which is a slow fade out for the sweeping down SFX.

I added CC #11 to the Midi Out channels I wanted, then using the Link to controller... option I linked the lead channels to the Sidechain automation clip directly. For the sweep channel I linked it to the Sweep automation clip, but not before modifying the Sweep clip by linking its max level to the Sidechain automation channel. In effect, it's like multiplying the Sidechain by the Sweep, because I don't think you can do that directly. I think I did try X-Y controller and the Peak Controller to achieve the same goal, but it just ended up being a barely-working mess.

If I were to draw a rough graph of that setup, it'd look somewhat like this:

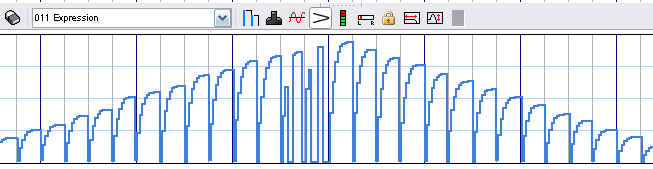
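In plain numbers, the "multiplying" described above boils down to something like the sketch below. It isn't how FL Studio does it internally (I don't know that); it just assumes both curves are sampled at the same points and are already in the 0-127 CC range, and the function name is made up.

```python
def scaled_sidechain(sweep, sidechain):
    """Multiply two 0-127 CC curves point by point, staying within 0-127."""
    return [round(s * c / 127) for s, c in zip(sweep, sidechain)]


# A slow fade-out (sweep) shaping a ramp that restarts every four points.
sweep = [127, 127, 127, 127, 64, 64, 64, 64]
sidechain = [0, 42, 85, 127, 0, 42, 85, 127]
print(scaled_sidechain(sweep, sidechain))
```

Each ramp still starts from zero, but its peak only reaches whatever level the sweep curve is at by then.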

Now, this approach matches what you would actually do if you were making EDM (and not stinky MIDI files). It's quite easy to control. The downside however, is that it will generate very granular CC event spam since each change gets resolved into something like a single tick in the MIDI file. You can see it here, where the output is really smooth:

As for SysEx... Haha. No. Coupled with Midi Out not being able to perform bank switches (you can have only one patch bank for the entire song), this effectively locks me into using General MIDI. Which is good for interoperability (and what MIDI is really designed for), but certainly limits the possible timbres you can use. If you're actually creative and don't have any skill issues (unlike me), then you definitely could work with it and make a masterpiece out of it.

For me though, I'm just gonna try something else.

What are my options?

The other choice I have here is Rosegarden, and that's a native app. But Rosegarden is just... weird to work with. I just use it to clean up MIDIs after they've been created with FL Studio and then export it again to be postprocessed with midicsv and back again. I'm not confident enough in using it as a main authoring tool.

And while we're at it, let's talk synths, shall we? The only two software synths natively available as a MIDI driver on my computer are TiMidity and FluidSynth. I tried playing YAOTA through both of them. Sadly, neither seems to deliver GS well.

In TiMidity's case, it can process GS SysEx in file mode, so YAOTA's bank switching and extra drums work. However, it doesn't seem to take them when using it as a MIDI port, so I just hear the basic square chorused lead instead of actual square waves and sine waves. Either that or it's a problem with Wine's MIDI drivers. Also for some reason, when using any gm.dls-derived soundfont, its drums are at a significantly lower pitch than usual. This one I do know is a problem with TiMidity.

As for FluidSynth, it handles soundfont volumes and pitches better, but I can't get it to read SysEx in MIDI files even when I tried to force it (with synth.midi-bank-select=gs). I haven't tried using it as a MIDI port, but I doubt it'll get any better.

Also, as briefly mentioned, Windows 10's MSGS is simply nerfed. Lower polyphony limit and seemingly more lag. VirtualMIDISynth is an excellent substitute, but I wanted the distorted sound of the ancient MSGS.

So, a virtual machine running Windows XP it is.

The first domino falls

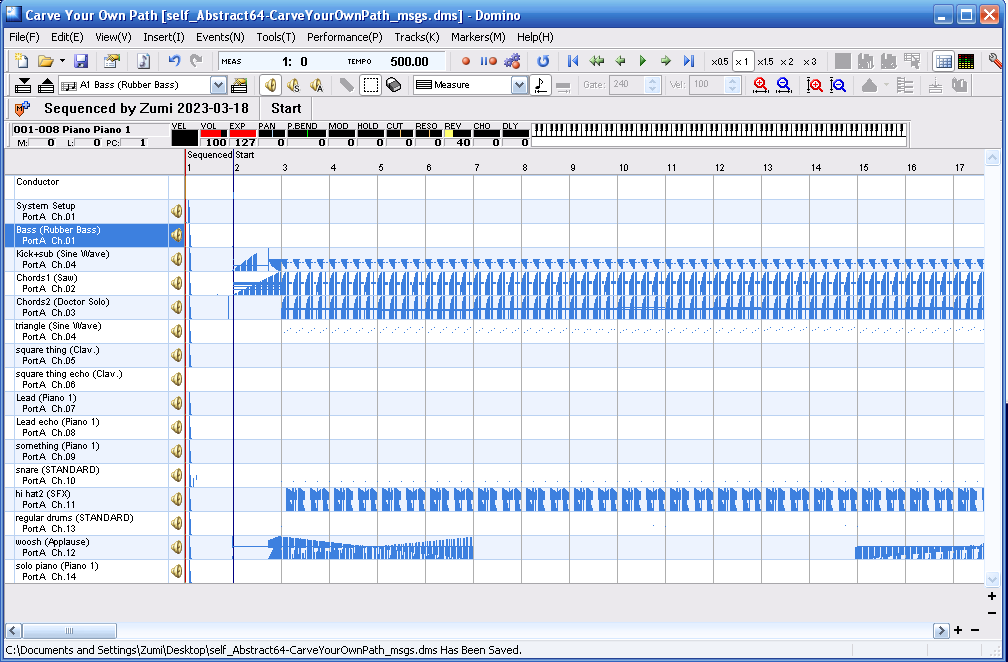

Kot told me they used Domino, a Japanese MIDI authoring tool. I'd heard of it before, since the Black MIDI community favors it for its speed, but Kot told me that it also makes SysEx handling quite easy.

The first hurdle is finding a translation, since this program's entirely in Japanese. There's the version 1.44 English translation, but apparently the version 1.43 translation is more complete. At first I'd assumed that each translation builds off of each other, but these seem to be separate. Either way, they're really hit-or-miss, but at least I've got some idea of what I'm doing.

The second is, well, just getting used to it. Mostly ways to work with notes. Found some notable features by reading the also best-effort translated manual, like double right-clicking on the piano roll switches you between select mode and drawing mode. Multiselection is done with the Ctrl key. I do find it funny how it can draw multiple selection boxes on top of others, though.

Domino definitely gives off the impression of a first-class MIDI editor. You have the option of drawing several types of curves in the event editor, changing playback speed, and I especially love the "mass change" feature that lets you change values (including notes and tick offsets) by giving it a math expression (say, 60%+10 velocity).
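To show what a change like "60%+10 velocity" works out to, here's a tiny sketch. It does not reproduce Domino's actual expression syntax or its rounding rules (I don't know those); it only assumes the result is rounded and clamped to the valid note-on velocity range of 1 to 127.

```python
def mass_change(values, percent=100.0, offset=0, low=1, high=127):
    """Scale each value by a percentage, add an offset, then clamp it."""
    return [max(low, min(high, round(v * percent / 100 + offset)))
            for v in values]


velocities = [100, 50, 127]
print(mass_change(velocities, percent=60, offset=10))  # [70, 40, 86]
```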

But the real gold is being able to set a "sound source definition", which unlocks the possibility of being able to set SysEx events easily through Domino macros... or whatever it is. When you set a definition through the Midi out settings (F12), it can start a new project with a couple of these already added so you can make use of it immediately. You don't even need to touch any hex data or a MIDI implementation details sheet, just click on the presets on the left of the window (if you can even make out what it's trying to say...). As Kot said, it did make SysEx stuff trivial.

Putting pen to paper

I figured Carve Your Own Path would make an excellent choice to start flexing what I learned. At the time of creation, Furnace (which is what was used to make it) had no meaningful Real™ export option for Namco 163 (besides wav), so it had to be placed in "allgear", where stuff literally made in DAWs can make it in. That makes it all the more impressive that it got top 5 in that category.

And yes, the irony of covering a song called "Carve Your Own Path" (which itself already has several really cool covers) is not lost on me.

Seems more like monkey see, monkey do than using creative energy if you ask me.

—Inspector Gadget, "Minecraft with Gadget"

Either way, I was quite determined to make it my test case. Check it out:

I would've put this in my MIDI page, but considering what I said about synths above... yeah. I'll need to wait until I implement something that excludes some tracks from playing in the browser.

For now, uhh... I don't know where to begin, really. Maybe let's go track-by-track. For starters, if you inspect the MIDI yourself, I named each track based on what it's used for, so it can give you some hints as to where to start looking. I'll still explain, for the heck of it:

- Bass, sidechained rubber bass. Applied the equivalent of a "12 0" arp macro on a typical MML or chiptune tracker here.

- Kick+sub, pure sine wave. I'm pretty sure this drives the entire song, as should be the case with any typical EDM (where kicks must take center stage). Whereas YAOTA has the kick and bass in different tracks, I combined both kick and sub bass in one track since the bass is on the off-beats.

  I know there are some songs that make use of a reversed kick drum, and they sound great. So I added some here, and I think it works just as well.

- Chords1, sidechained pure saw wave. Originally I was just gonna leave it as plain notes, but I decided to add a rising pitch bend to the start of each measure to spice things up a bit.

- Chords2, sidechained pure chorused wave. Most of the time it's a copy of Chords1. I silenced it during the solo to increase the prominence of the piano and bass. It didn't contribute much to the sound at that part anyway.

- triangle, pure sine wave. Its channel allocation is shared with the Kick+sub channel, so there may be some cuts. Sloppily extended some parts.

- Square thing, sidechained pure square waves. The clav sounded similar to a 12.5% square wave, so I used that alternating with the 50% square found in bank 1. I had some fun with the panning here. Also applied the arp trick here.

- Square thing echo, sidechained pure square waves. Ditto.

- Lead, multiduty. What it says on the tin. Tried to go a little wild with the pitch bends here, as the original kinda went to town with them too. I'm happy with what I did with the Expression CC during the solo; I was trying to simulate what an actual sax or trumpet player might do, where the volume goes down and then up again.

- Lead echo, multiduty. Ditto, except delayed by a couple of ticks. During the solo, I removed the delay and made it exactly an octave higher than the lead to act as a second voice.

- Something, multiduty. Meant as a sort of additional accompaniment. Some parts are inspired by Zyl's cover and others are analogous to components in the original.

- Snare, percussion. Placed in Ch. 10. Well, this is where the snare was, but I was too lazy to change the name. Instead you have a bunch of clicks to increase the prominence of the kick, some claps, and a weird riser made of open triangles (found through trial and error).

- hi hat2, percussion. Placed in Ch. 11. Hey, here's where the snare went! I tried the same pitch-bent drums trick as YAOTA, but clearly it's a bit more amateur. But hey, it kinda works.

- regular drums, percussion. Placed in Ch. 13. Yes, these are everyday boring MSGS drums. Limited to cymbals and hi-hats and the occasional agogo.

- woosh, sidechained SFX. Woosh indeed. Here, I tried the automation trick described above but manually. First, by drawing the base curve where it rises and falls, and then drawing the sidechain curves manually such that its maximum on every beat corresponds to wherever the base curve falls at that point in time, giving this effect:

- solo piano, sidechained. Really just repeating the saw chords in a more "interesting" pattern. Sidechain is only half the time, though; I wanted the low notes to be heard, I guess.
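Since the arp trick on the Bass and Square thing tracks might not be obvious, here's a sketch of the general idea: chop one long note into short steps whose pitch follows a list of semitone offsets. The function name, the step length, and the choice between a looping and a non-looping macro are all assumptions made for illustration, not a description of what's actually in the file.

```python
def arp_macro(note, start, length, step, offsets=(12, 0), loop=True):
    """Split one note into (tick, pitch, duration) steps following offsets.

    With loop=True the offsets repeat for the whole note. With loop=False
    the last offset is held for the remainder, tracker-macro style.
    """
    events = []
    end = start + length
    tick, i = start, 0
    while tick < end:
        if loop:
            offset = offsets[i % len(offsets)]
            duration = min(step, end - tick)
        else:
            offset = offsets[min(i, len(offsets) - 1)]
            on_last_offset = i >= len(offsets) - 1
            duration = end - tick if on_last_offset else min(step, end - tick)
        events.append((tick, note + offset, duration))
        tick += duration
        i += 1
    return events


print(arp_macro(36, start=0, length=120, step=30))
# [(0, 48, 30), (30, 36, 30), (60, 48, 30), (90, 36, 30)]
print(arp_macro(36, start=0, length=120, step=30, loop=False))
# [(0, 48, 30), (30, 36, 90)]
```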

There's also the part where all sound stops for a quarter of a beat (made by setting the expression CC to 0); that was also quite interesting to try. Hopefully it adds to the EDM kinda vibe, although at the same time it still sounds very much like a MIDI. Yeah, skill issue on my end here.

And as I've said, the problem with using MSGS to make "impressive" sounds is that it only works with MSGS. And again, VirtualMIDISynth with GMGSx.sf2 or Scc1t2.sf2 can absolutely handle it. I'm afraid I can't say the same with other engines or soundfonts, since... y'know, this isn't what MIDI is designed for.

OpenMPT seems to handle YAOTA fine, but some events have reduced accuracy when I try it with mine, even at the fastest import speed settings. Probably because YAOTA is more polished than my attempt lol.

What now?

Although I said in the last post that I assume everyone's gonna be using Windows Media Player to play my MIDIs, I've always treated MIDI as the "universal standard" it is and... you know, actually considered situations like soundfonts not having any SFX patches, anticipated shitty pitch bend playback (stares at FL Studio), attempted to tag it properly, etc. But the thing is, MIDI is an entire rabbit hole. There are several different ways of playing the same thing that are "supposed" to sound the same but don't. Several competing standards, all based off one file standard, itself based off of a unified protocol.

MIDI definitely ain't no HTML, yet I somehow treat it as such. The parallel is different user agents receiving the same standardized data and deciding what to do with it. Pulling off something with MSGS feels like using Tailwind, tossing print and other media as well as responsive design down the bin, or tagging for the OSM renderer...

But in this case, it's oddly worth it. Like chiptune (think NES, C64, Amiga), you're working with only a few soundsets, and those soundsets only, which gives you some freedom to go absolutely wild with it, with the assurance that it's gonna sound, mostly, the same everywhere. No need to think about how other playback methods may mangle your song; as far as you're concerned it's their problem, not yours.

Overall, I've enjoyed the process, and I'm quite happy with the result. That being said, is a comparison between "Best played with MSGS" and "Best viewed with Internet Explorer Netscape Chrome" fair?